Image: Owlie Productions / Shutterstock.com

The AI chatbot ChatGPT from OpenAI triggered the hype surrounding generative artificial intelligence and dominates much of the media coverage.

However, besides the AI models from OpenAI, there are other chatbots that deserve attention. Unlike ChatGPT, these can also run locally on your PC, and you can even use them free of charge for an unlimited period of time.

We'll show you four local chatbots that also run on older hardware. You can talk to them or use them to create texts.

The chatbots presented here generally consist of two components: a front end and an AI model, the large language model.

After installing the software, you choose which model runs in the front end. Operation is not difficult once you know the basics. Some of the chatbots offer very extensive settings, and using these requires expert knowledge. However, the bots also work well with the default settings.


What local AI can do

What you can expect from a local large language model (LLM) also depends on what you give it. LLMs need computing power and a lot of RAM to respond quickly.

If these requirements are not met, the large models will not even start, and the small ones will take an agonizingly long time to respond. Things are faster with a current graphics card from Nvidia or AMD, because most local chatbots and AI models can then use the hardware's GPU.

If your PC only has a weak graphics card, the CPU has to do all the calculations, and that takes time.

If your PC only has 8GB of RAM, you can only run very small AI models. They can give good answers to many simple questions, but they quickly run into problems with more peripheral topics. Computers with 12GB of RAM are already quite good, but 16GB of RAM or more is even better.

With that much memory, even AI models with 7 to 12 billion parameters will run smoothly. You can usually tell how many parameters a model has from its name: a suffix such as 2B or 7B at the end stands for 2 or 7 billion.
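
The naming convention and the memory question can be illustrated with a short Python sketch. It reads the size suffix from a model name and estimates how much memory the weights alone occupy. The sketch assumes the weights are stored at a fixed number of bits per parameter; 4 bits is used here as an illustrative default. The chatbot itself and the conversation need additional RAM on top, so treat the results as rough lower bounds, not official requirements.

```python
import re


def parameter_count(model_name: str) -> float:
    """Return the size suffix of a model name such as 'Llama 3.1 8B', in billions."""
    # A number directly followed by 'B' or 'b' at the end of a word, e.g. '2B', '7b', '1.5B'.
    match = re.search(r"(\d+(?:\.\d+)?)\s*[bB]\b", model_name)
    if match is None:
        raise ValueError(f"no size suffix found in {model_name!r}")
    return float(match.group(1))


def weight_memory_gb(billions: float, bits_per_weight: int = 4) -> float:
    """Approximate memory for the weights alone: parameters x bits / 8, in gigabytes."""
    # Assumption: every weight uses the same number of bits (a uniform quantization level).
    return billions * bits_per_weight / 8


# Example: an 8B model needs roughly 4 GB for its weights at 4 bits, 16 GB at 16 bits.
```

Keep in mind that the name is rounded. For example, Gemma 2 2B actually has 2.6 billion parameters.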

Recommendation for your hardware: Gemma 2 2B, with 2.6 billion parameters, already runs with 8GB of RAM and without GPU support. The results are usually fast and well structured. If you need an even less demanding AI model, you can use Llama 3.2 1B, for example in the LM Studio chatbot.

If your PC has a lot of RAM and a fast GPU, try Gemma 2 7B or a slightly larger Llama model, such as Llama 3.1 8B. You can load these models in the chatbots Msty, GPT4All, or LM Studio.

You'll find information about the AI models for the Llamafiles below. And for your information: ChatGPT from OpenAI is not available as a local program for the PC. OpenAI's apps and PC tools send all requests over the internet.

The most important steps

Using the different chatbots is very similar. You install the tool, load an AI model through it, and then switch to the program's chat area. Then you're ready to go.

With the Llamafile chatbot, there is no need to download a model, because an AI model is already built into the Llamafile. That is why there are several Llamafiles, each with a different model.


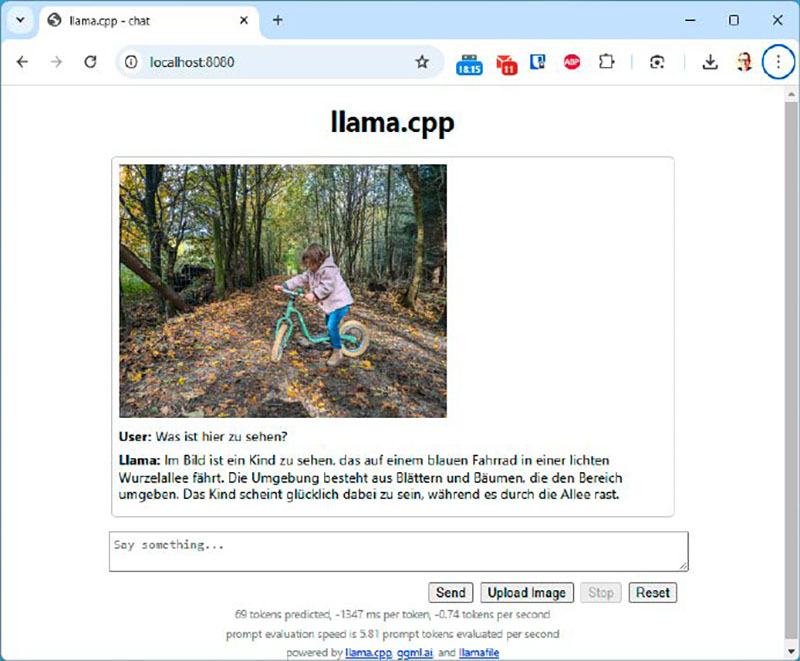

Llamafile

Llamafiles are the easiest way to talk to a local chatbot. The aim of the project is to make AI accessible to everyone. That's why the creators pack all the necessary files, i.e. the front end and the AI model, into a single file: the Llamafile.

You only need to start this file, and then you can use the chatbot in your browser. However, the user interface is not very attractive.

The Llamafile chatbot comes in different versions, each with a different AI model. With the Llava model, you can also add images to the chat. Overall, Llamafile is an easy-to-use chatbot.


Easy installation

Only one file is downloaded to your computer. The file name depends on the model you choose.

For example, if you have chosen the Llamafile with the Llava 1.5 model with 7 billion parameters, the file is called "llava-v1.5-7b-q4.llamafile." Because the .exe file extension is missing, you have to rename the file in Windows Explorer after downloading it.

Windows Explorer will show a warning, which you can dismiss by clicking "Yes." The file name is then "llava-v1.5-7b-q4.llamafile.exe." Double-click the file to start the chatbot. On older PCs, it may take a moment before Microsoft Defender SmartScreen displays a warning.
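
If you prefer not to rename the file by hand, a few lines of Python can do the same job. This is a minimal sketch. The download folder in the example comment is an assumption, so adjust the path to where your browser actually saved the file.

```python
from pathlib import Path


def add_exe_extension(path: Path) -> Path:
    """Append '.exe' to a downloaded llamafile so that Windows treats it as a program."""
    if path.suffix.lower() == ".exe":
        return path  # already runnable, nothing to do
    target = path.with_name(path.name + ".exe")
    path.rename(target)  # same effect as renaming the file in Windows Explorer
    return target


# Example (hypothetical location, adjust to your download folder):
# add_exe_extension(Path.home() / "Downloads" / "llava-v1.5-7b-q4.llamafile")
```

The function leaves files that already end in .exe untouched, so running it twice does no harm.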

Click "Run anyway." A command prompt window opens, but it is only needed by the program. The chatbot has no user interface of its own and must be operated in the browser. If your default browser does not open automatically, start it and enter the address 127.0.0.1:8080 or localhost:8080.
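
Because the chatbot runs as a small local web server, other programs can talk to it as well, not just the browser. The sketch below assumes that the running llamafile offers an OpenAI-compatible chat endpoint at /v1/chat/completions. The llamafile project's documentation describes such an endpoint, but check it against the version you downloaded. The model name "LLaMA_CPP" is a placeholder taken from that documentation, and the timeout is an arbitrary choice. Only the Python standard library is used.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8080"  # the address the article opens in the browser


def build_chat_request(question: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a POST request for the (assumed) OpenAI-style chat endpoint of a running llamafile."""
    payload = {
        # Assumption: placeholder model name; a llamafile serves the one model built into it.
        "model": "LLaMA_CPP",
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(question: str, base_url: str = BASE_URL, timeout: float = 300.0) -> str:
    """Send one question and return the reply text; the llamafile must already be running."""
    with urllib.request.urlopen(build_chat_request(question, base_url), timeout=timeout) as response:
        body = json.load(response)
    # OpenAI-style responses put the answer in the first choice's message.
    return body["choices"][0]["message"]["content"]
```

With the llamafile running, `ask("What is a llamafile?")` should return the model's answer as a string. The generous timeout reflects the fact that answers computed on the CPU can take a long time.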

If you want to use a different AI model, you have to download a different Llamafile. You can find them further down the page on Llamafile.ai, in the "Other example llamafiles" table. Each Llamafile needs the .exe file extension.

Chatting with the Llamafile

The user interface in the browser shows the chatbot's settings at the top. The chat input field is at the bottom of the page, under "Say something."

If you have started a Llamafile with the Llava model (llava-v1.5-7b-q4.llamafile), you can not only chat but also have images explained to you via "Upload Image" and "Send." Llava stands for "Large Language and Vision Assistant." To end the chatbot, simply close the command prompt window.

Tip: You can also use Llamafiles in your own network. Start the chatbot on a powerful PC in your home network and make sure that the other PCs are allowed to access this computer. You can then use the chatbot from those PCs in the web browser. Enter the IP address of the PC on which the chatbot is running, followed by ":8080".
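
To avoid typos in that address, a small helper can build it and check whether the port answers. This is a minimal sketch. The example IP address in the usage note is hypothetical, and the helper only accepts private IPv4 addresses, as used in typical home networks. Depending on the version, a llamafile may only listen on the local machine by default. The llamafile documentation explains how to make it reachable from other PCs.

```python
import ipaddress
import socket


def lan_chat_url(host_ip: str, port: int = 8080) -> str:
    """Return the browser address for a chatbot running on another PC in the home network."""
    ip = ipaddress.IPv4Address(host_ip)  # raises ValueError for typos such as '192.168.1'
    if not ip.is_private:
        raise ValueError(f"{ip} is not a private (home network) address")
    return f"http://{ip}:{port}/"


def is_reachable(host_ip: str, port: int = 8080, timeout: float = 2.0) -> bool:
    """Try to open a TCP connection to the chatbot's port; True if something is listening."""
    try:
        with socket.create_connection((host_ip, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or blocked by a firewall
        return False
```

For a PC with the hypothetical address 192.168.1.20, `lan_chat_url("192.168.1.20")` returns "http://192.168.1.20:8080/". If `is_reachable` returns False, the firewall on the chatbot PC is a likely culprit.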

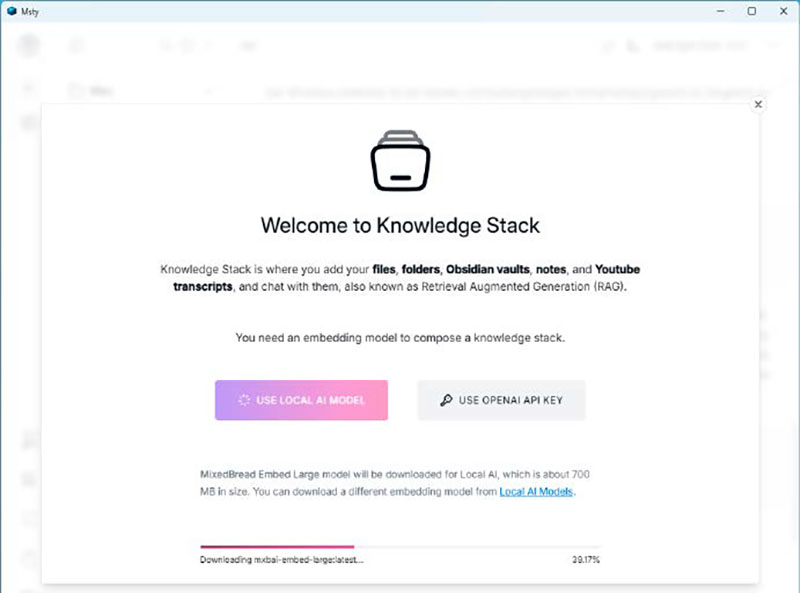

Msty

Msty offers access to many language models, good user guidance, and the option of importing your own files for use by the AI. Not everything is self-explanatory, but it is easy to use after a short familiarization period.

If you want to make your own files available to the AI purely locally, you can do so in Msty's so-called Knowledge Stack. That sounds a little pretentious. However, Msty actually offers the best file integration of the four chatbots presented here.


Installing Msty

Msty is available for download in two versions: one with support for Nvidia and AMD GPUs and one that runs on the CPU only. When you start the Msty installation wizard, you can choose between a local installation ("Set up local AI") and an installation on a server.

For the local installation, the Gemma 2 model is already selected in the lower part of the window. This model is only 1.6GB in size and is well suited for creating text on weaker hardware.

If you click on "Gemma2," you can choose between five other models. Later, you can load many more models from a clearly organized library via "Local AI Models," such as Gemma 2 2B or Llama 3.1 8B.

"Browse & Download Online Models" gives you access to the AI sites www.ollama.com and www.huggingface.com, and therefore to most of the free AI models.

A special feature of Msty is that you can ask several AI models for advice at the same time. However, your PC needs enough memory to respond quickly. Otherwise, you may have to wait a very long time for the finished answers.

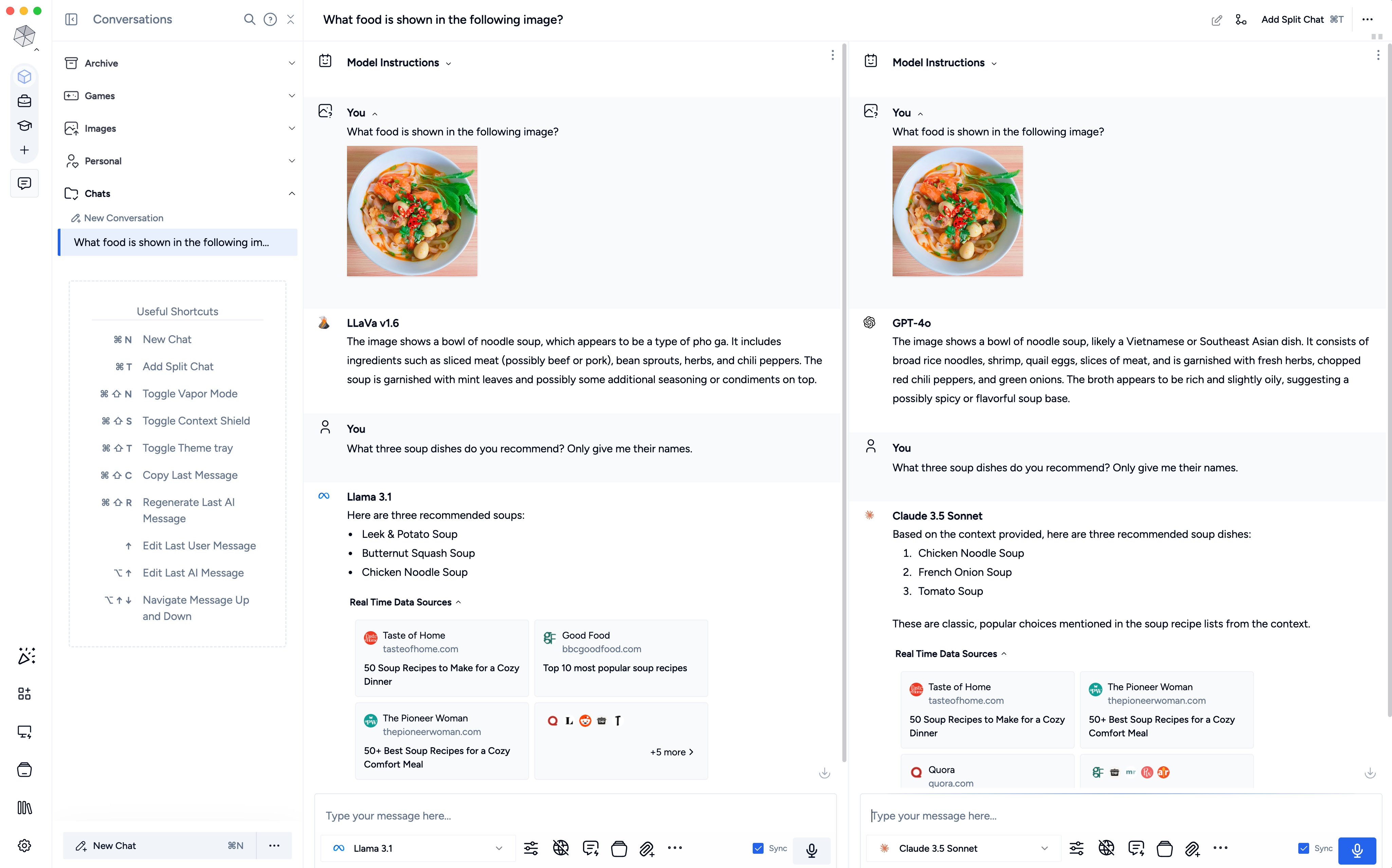


Attractive interface, plenty of substance

Msty's user interface is appealing and well structured. Of course, not everything is immediately obvious. Once you are familiar with Msty, though, you can quickly use the tool, add new models, and integrate your own files. Msty also provides access to the various, often cryptic options of the individual models, at least partly through graphical menus.

In addition, Msty offers so-called split chats. The user interface then displays two or more chats side by side, and you can select a different AI model for each one. You only have to enter your question once, which lets you compare several models with each other.
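
The idea behind split chats can be sketched in a few lines: one question goes out to several models, and the answers come back side by side. This is not Msty's implementation, which the article does not describe. The `ask` callback is hypothetical and stands for whatever interface the local models expose. Running the models in parallel also shows why the PC needs enough memory, as mentioned earlier: every model has to be loaded at the same time.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

# Hypothetical callback: takes (model_name, question) and returns that model's answer.
AskFn = Callable[[str, str], str]


def split_chat(question: str, models: List[str], ask: AskFn) -> Dict[str, str]:
    """Send the same question to several models in parallel and collect the answers per model."""
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        futures = {model: pool.submit(ask, model, question) for model in models}
        return {model: future.result() for model, future in futures.items()}
```

With a real `ask` function in place, the returned dictionary maps each model name to its answer, which is the same comparison a split chat shows on screen.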

Add your own files

You can easily integrate your own files via "Knowledge Stacks." You can also choose which embedding model prepares your files for the LLMs.

Mixedbread Embed Large is used by default, but you can load other embedding models. Be careful when choosing one, however, because you can also select online embedding models, for example from OpenAI.

In that case, your data is sent to OpenAI's servers for processing. The database created from your data is then online as well, so every query also goes to OpenAI.

Chat with your own files: Once you have added your own documents to the "Knowledge Stacks," select "Join Knowledge Stack and Chat with them" below the chat input line. Tick the box in front of your stack and ask a question. The model will then search your data to find the answer. However, this does not yet work perfectly.
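
What happens behind a Knowledge Stack can be sketched in miniature. The documents are turned into vectors, the question is turned into a vector, and the most similar passages are handed to the model. In the sketch below, a simple word-count vector stands in for a real embedding model such as Mixedbread Embed Large. This substitution is an assumption made to keep the example self-contained, and it is not how Msty works internally.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: counts of the lower-case words in the text."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors (0.0 = nothing in common)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(c * c for c in a.values())) * math.sqrt(sum(c * c for c in b.values()))
    return dot / norm if norm else 0.0


def top_passages(question: str, passages: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Rank the passages by similarity to the question and keep the best k."""
    q = embed(question)
    scored = [(cosine(q, embed(p)), p) for p in passages]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]
```

A real embedding model also recognizes passages that use different words with the same meaning, which a word count cannot do. That is one reason why the choice of embedding model matters.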

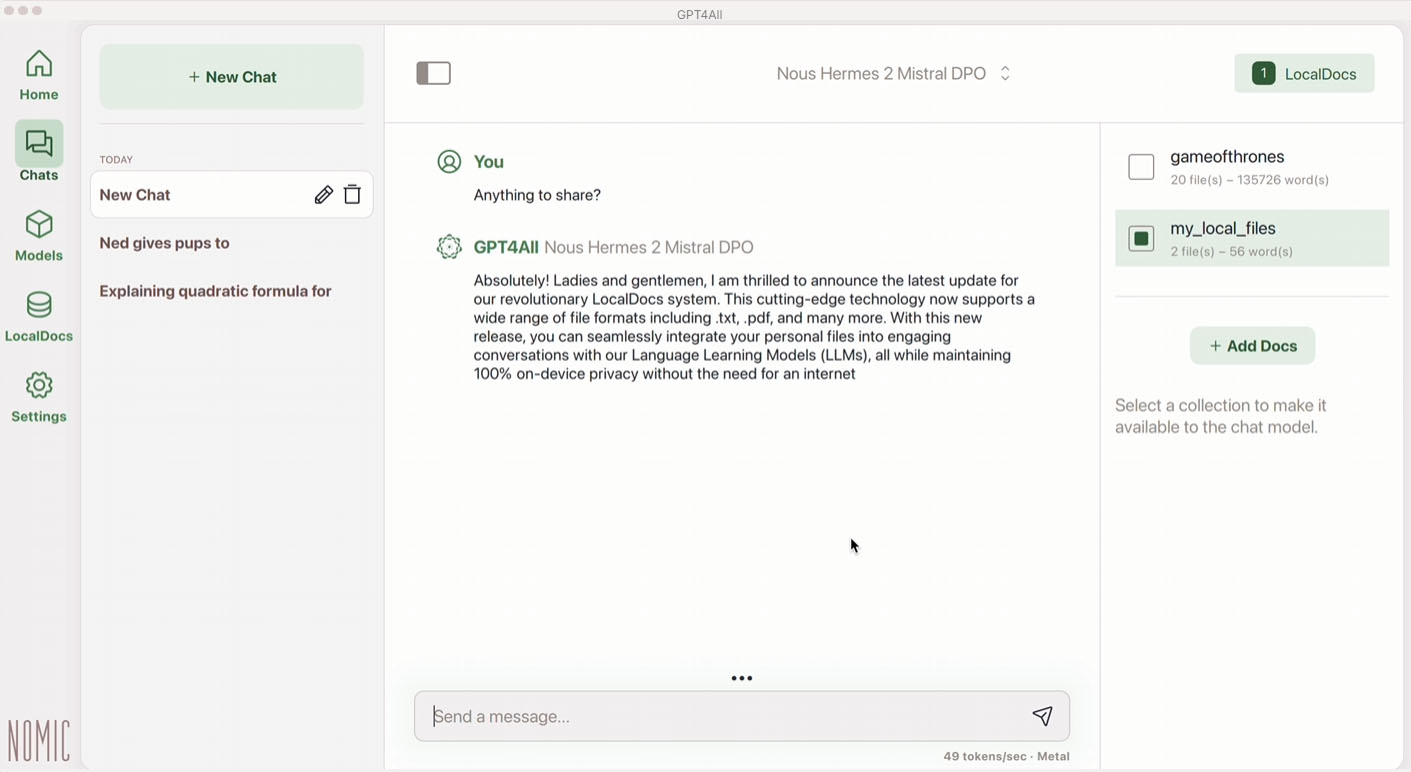

GPT4All

GPT4All offers a few models, a simple user interface, and the option of reading in your own files. The selection of chat models is smaller than in Msty, for example, but it is clearer. You can download additional models via Huggingface.com.

The GPT4All chatbot is a solid front end that offers a good selection of AI models and can load more from Huggingface.com. The user interface is well structured, and you can quickly find your way around.


Installation: Quick and easy

Installing GPT4All was quick and easy for us. You can select AI models under "Models." On offer are models such as Llama 3 8B, Llama 3.2 3B, Microsoft Phi 3 Mini, and EM German Mistral.

Good: For each model, GPT4All states how much free RAM your PC needs to run it. The search function also gives you access to AI models on Huggingface.com. In addition, you can integrate the online models from OpenAI (ChatGPT) and Mistral via API keys, if you don't only want to chat locally.

Operation and chat

The user interface of GPT4All is similar to that of Msty, but with fewer functions and options, which makes it easier to use. After a short orientation phase, once it is clear how to load models and where to select them for the chat, operation is easy.

You can make your own files available to the AI models via "LocalDocs." Unlike in Msty, you cannot choose which embedding model prepares the data. GPT4All always uses the Nomic-embed-text-v1.5 model.

In our tests, the tool ran stably. However, it was not always clear whether a model had finished loading.

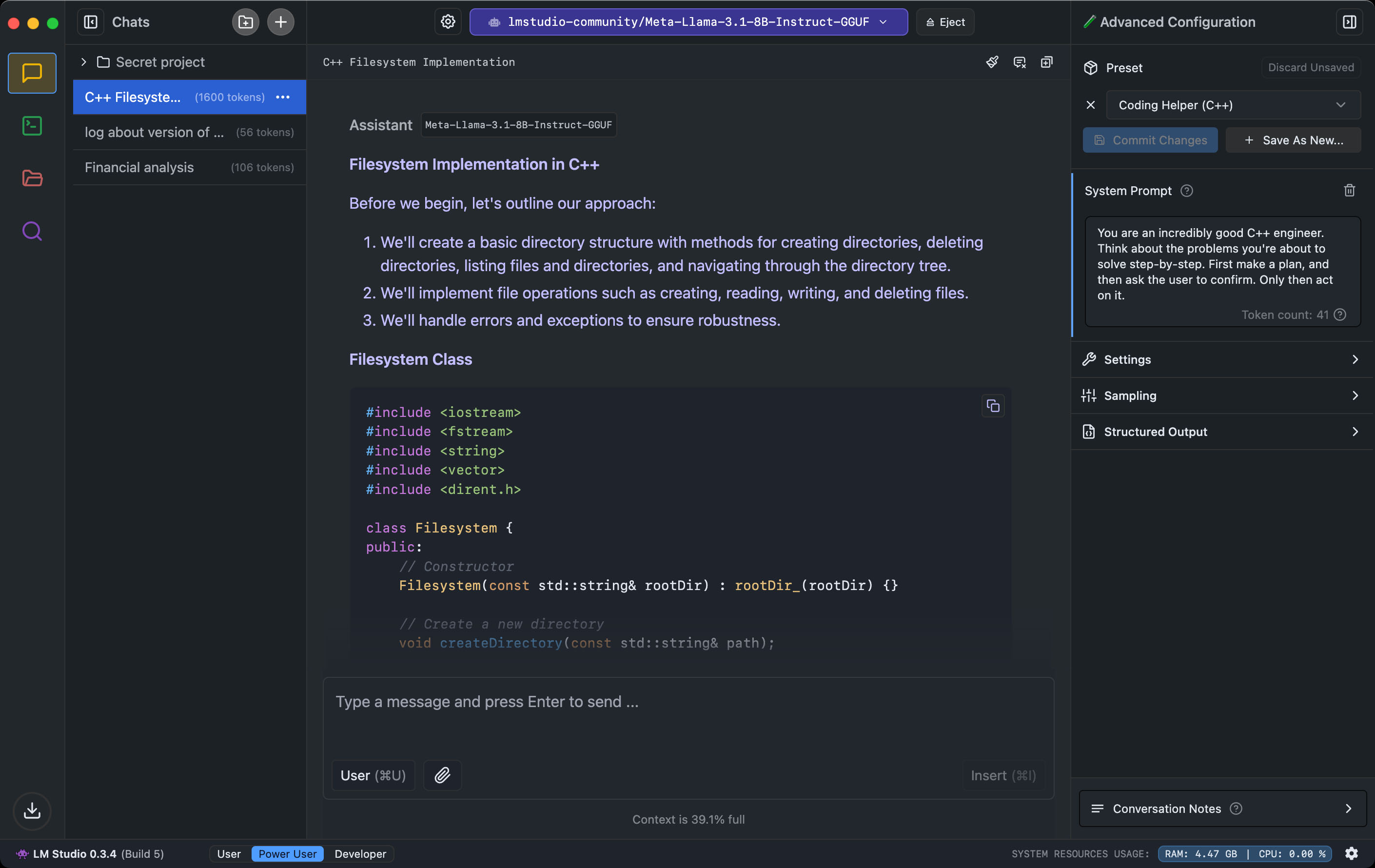

LM Studio

LM Studio offers user guidance for beginners, advanced users, and developers. Despite this categorization, it is aimed more at experts than at beginners. What experts like is that LM Studio gives them access not only to many models but also to their settings.

The LM Studio chatbot gives you access to a large selection of AI models from Huggingface.com. It also lets you fine-tune the settings of the AI models, and there is a separate developer view for this.


Easy installation

After installation, LM Studio greets you with the "Get your first LLM" button. Clicking it offers you a very small version of Meta's LLM: Llama 3.2 1B.

This model should run even on older hardware without long waiting times. After downloading it, you start the model via a pop-up window and "Load Model." You can add more models with the Ctrl-Shift-M key combination or via the "Discover" magnifying glass icon, for example.

Chat and integrate documents

At the bottom of the LM Studio window, you can change the program's view with the three buttons "User," "Power User," and "Developer."

In the first view, the user interface is similar to that of ChatGPT in the browser. In the other two, the view shows additional information, such as how many tokens a response contains and how quickly they were generated.
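
The speed figure is simple arithmetic: tokens divided by seconds. It also tells you roughly how long you will wait for an answer. The numbers in the note below are invented for illustration, not measurements.

```python
def tokens_per_second(token_count: int, seconds: float) -> float:
    """Generation speed: number of generated tokens divided by the elapsed time."""
    if seconds <= 0:
        raise ValueError("elapsed time must be positive")
    return token_count / seconds


def expected_wait(answer_tokens: int, speed: float) -> float:
    """Approximate seconds needed to generate an answer of the given length at a given speed."""
    if speed <= 0:
        raise ValueError("speed must be positive")
    return answer_tokens / speed
```

At a hypothetical 5 tokens per second, a 300-token answer takes about a minute. At 30 tokens per second, it takes about ten seconds. This is the practical difference between a slow CPU and a fast GPU.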

This, together with access to many details of the AI models, makes LM Studio particularly interesting for advanced users. You can make many fine adjustments and view detailed information.

You can only add your own texts to an individual chat. You cannot make them permanently available to the language models. When you add a document to your chat, LM Studio automatically decides whether it is short enough to fit entirely into the AI model's prompt.

If not, LM Studio searches the document for relevant content using Retrieval Augmented Generation (RAG) and passes only that content to the model in the chat. As a result, the text is usually not captured in full.
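
This fit-or-retrieve decision can be sketched as follows. The article does not say which thresholds or retrieval method LM Studio uses. The ratio of 4 characters per token is a rough rule of thumb assumed here, and the keyword scoring is a deliberately simple stand-in for real retrieval. The sketch also shows why the text is usually not captured in full: only the best-matching chunks reach the model.

```python
from typing import List

CHARS_PER_TOKEN = 4  # assumption: rough rule of thumb for English text; real tokenizers differ


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def split_into_chunks(text: str, chunk_tokens: int) -> List[str]:
    """Cut the text into pieces of roughly chunk_tokens tokens each (by character count)."""
    size = chunk_tokens * CHARS_PER_TOKEN
    return [text[i:i + size] for i in range(0, len(text), size)]


def prepare_context(document: str, question: str, context_tokens: int,
                    chunk_tokens: int = 256, k: int = 3) -> List[str]:
    """Return the whole document if it fits, otherwise only the k chunks that best match the question."""
    # Simplification: ignores the room the question and the answer also need in the context.
    if estimate_tokens(document) <= context_tokens:
        return [document]  # short enough: the full text goes into the prompt
    words = set(question.lower().split())
    chunks = split_into_chunks(document, chunk_tokens)
    # Simplified retrieval: rank chunks by how many question words they contain.
    ranked = sorted(chunks, key=lambda c: len(words & set(c.lower().split())), reverse=True)
    return ranked[:k]
```

Everything outside the returned chunks stays invisible to the model. Information spread across a long document can therefore be missed.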

This article originally appeared on our sister publication PC-WELT and was translated and localized from German.

Author: Arne Arnold, Contributor, PCWorld

Arne Arnold has been working in the IT industry for over 30 years, most of that time with a focus on IT security. He tests antivirus software, gives tips on how to make Windows more secure, and is always on the lookout for the best security tools for Windows. He is currently testing new AI tools and wondering what they mean for our future.