Serving tech enthusiasts for over 25 years.

TechSpot means tech analysis and advice you can trust.

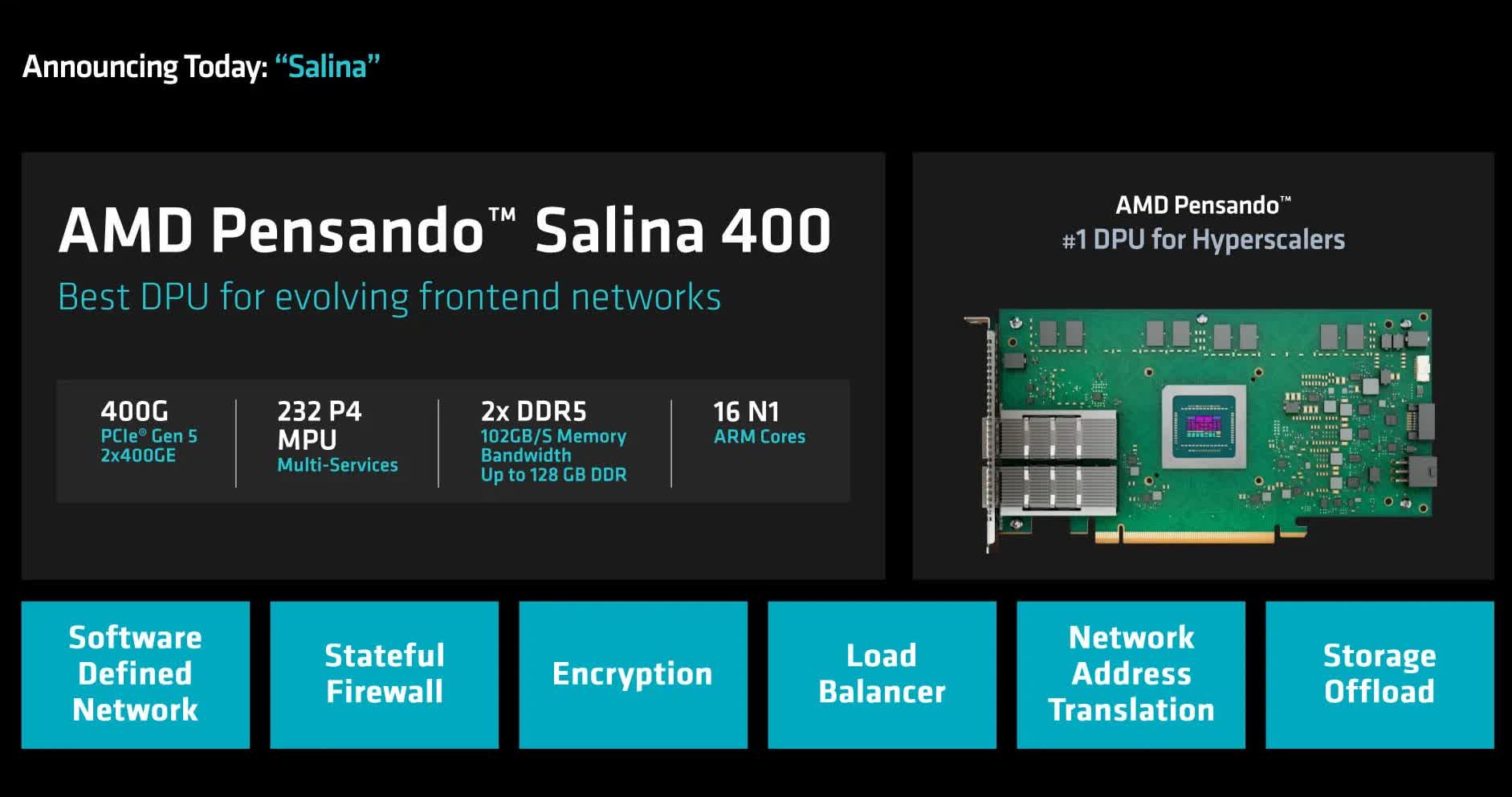

Cutting corners: As AI models continue to grow in size and complexity, the need for specialized networking solutions is becoming increasingly critical. AMD's introduction of the Pollara 400 underscores the growing importance of specialized hardware in the AI ecosystem.

AMD has unveiled the Pensando Pollara 400, a fully programmable 400 Gigabit per second (Gbps) RDMA Ethernet-ready network interface card (NIC) designed to boost AI cluster networking.

The rise of generative AI and LLMs has exposed critical shortcomings in traditional Ethernet networks. These advanced AI models require intensive communication capabilities, including tightly coupled parallel processing, rapid data transfers, and low-latency communication. Traditional Ethernet, originally designed for general-purpose computing, has struggled to meet these specialized needs.

Yet Ethernet remains the preferred choice for AI cluster networking because of its widespread adoption. However, the growing gap between Ethernet's capabilities and the demands of AI workloads has become increasingly evident.

AMD says its Pensando Pollara 400 is specifically designed to optimize data transfer within back-end AI networks, with a particular focus on GPU-to-GPU communication. According to AMD, the Pollara 400 can deliver up to a sixfold performance boost for AI workloads compared with traditional Ethernet solutions.

The Pollara 400 is engineered to handle the communication patterns of AI workloads, offering high throughput across all available links, reduced tail latency, scalable performance, and faster job completion times.
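Why does tail latency matter so much for job completion? In a tightly coupled collective operation, every GPU waits for the slowest transfer before the next step can begin. The toy model below is an illustrative assumption, not an AMD benchmark: it treats each step's duration as the maximum of its per-flow latencies, and the latency distributions (`light_tail`, `heavy_tail`) and all numbers are hypothetical. It shows how a small fraction of slow flows can dominate total job time even when typical latency is unchanged.

```python
import random


def step_time(flow_latencies_us):
    # A synchronous collective step finishes only when its slowest flow does.
    return max(flow_latencies_us)


def job_time(steps, flows_per_step, sample_latency, seed=0):
    # Sum of per-step times; each step draws one latency per flow.
    rng = random.Random(seed)
    total = 0.0
    for _ in range(steps):
        latencies = [sample_latency(rng) for _ in range(flows_per_step)]
        total += step_time(latencies)
    return total


def light_tail(rng):
    # Hypothetical: every flow takes 10 us plus up to 1 us of jitter.
    return 10.0 + rng.uniform(0.0, 1.0)


def heavy_tail(rng):
    # Hypothetical: same typical latency, but 1% of flows land on a
    # congested path and pay an extra 50 us.
    base = 10.0 + rng.uniform(0.0, 1.0)
    return base + 50.0 if rng.random() < 0.01 else base


if __name__ == "__main__":
    for name, dist in [("light tail", light_tail), ("heavy tail", heavy_tail)]:
        print(name, round(job_time(1000, 64, dist), 1), "us")
```

With 64 flows per step, a 1% per-flow chance of congestion means roughly half of all steps contain at least one slow flow, so the heavy-tailed run takes about three times as long overall despite nearly identical median latency.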

For example, the card uses intelligent multipathing to dynamically distribute data packets across optimal routes, preventing network congestion. Its programmable hardware pipeline allows network processes to be customized and optimized, while its programmable RDMA transport enhances remote direct memory access capabilities. Additionally, the Pollara 400 accelerates communication libraries commonly used in AI workloads.
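AMD does not detail the Pollara 400's multipathing algorithm here, so the following is only a generic sketch of the idea under stated assumptions. Packets from a single flow are "sprayed" across several paths, each packet going to whichever path currently has the shortest queue, and a sequence number on every packet lets the receiver restore the original order. The `Path` class, the `spray` and `reorder` functions, and the queue-depth congestion signal are all hypothetical illustrations, not AMD's design or API.

```python
from dataclasses import dataclass, field


@dataclass
class Path:
    name: str
    # Bytes currently queued on this path; used as a hypothetical congestion signal.
    # In this toy model queues only grow, they never drain.
    queue_bytes: int = 0
    sent: list = field(default_factory=list)


def spray(payload_sizes, paths):
    """Assign each packet to the least-loaded path; return (seq, path name) pairs."""
    assignments = []
    for seq, size in enumerate(payload_sizes):
        # Pick the path with the smallest queue; min() breaks ties by list order.
        best = min(paths, key=lambda p: p.queue_bytes)
        best.queue_bytes += size
        best.sent.append(seq)
        assignments.append((seq, best.name))
    return assignments


def reorder(received):
    """Receiver side: restore the original packet order from sequence numbers."""
    return [seq for seq, _ in sorted(received)]


if __name__ == "__main__":
    # p1 starts out congested, so it should receive fewer packets.
    paths = [Path("p0"), Path("p1", queue_bytes=8000), Path("p2")]
    print(spray([4096] * 6, paths))
    print({p.name: p.sent for p in paths})
```

Here the already-congested path `p1` receives only one of the six packets, while the idle paths share the rest. Because packets of one flow can arrive out of order when they take different routes, the receiver-side reordering step is what makes this kind of spraying usable for RDMA-style transfers.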

AMD is releasing the Pollara 400 even though the Ultra Ethernet initiative has delayed the release of the version 1.0 specification from the third quarter of this year to the first quarter of 2025.

BOOM! AMD enters the back end AI network game with a UEC (UltraEthernet) NIC. Didn't expect this one, but the Nvidia full solutions posture has to drive this. Need to do some work to get under this. CC @WillTownTech @DellTech CTO on stage discussing. $AMD… pic.twitter.com/LBwGt34zWz

– Patrick Moorhead (@PatrickMoorhead) October 10, 2024

This new standard aims to scale Ethernet technology to meet the performance and feature requirements of AI and HPC workloads. It is designed to preserve as much of the existing Ethernet technology as possible while introducing profiles tailored to the specific needs of AI and HPC, which, though related, have distinct requirements.

AMD's NIC is scheduled to begin sampling in the fourth quarter of 2024 and should be commercially available in the first half of 2025, around the time the UEC 1.0 specification is released.