Researcher feeds screen recordings into Gemini to extract accurate information with ease.

Recently, AI researcher Simon Willison wanted to add up his charges from using a cloud service, but the payment values and dates he needed were scattered among a dozen separate emails. Inputting them manually would have been tedious, so he turned to a technique he calls “video scraping,” which involves feeding a screen recording video into an AI model like ChatGPT for data extraction purposes.

What he discovered seems simple on its surface, but the quality of the result has deeper implications for the future of AI assistants, which may soon be able to see and interact with what we’re doing on our computer screens.

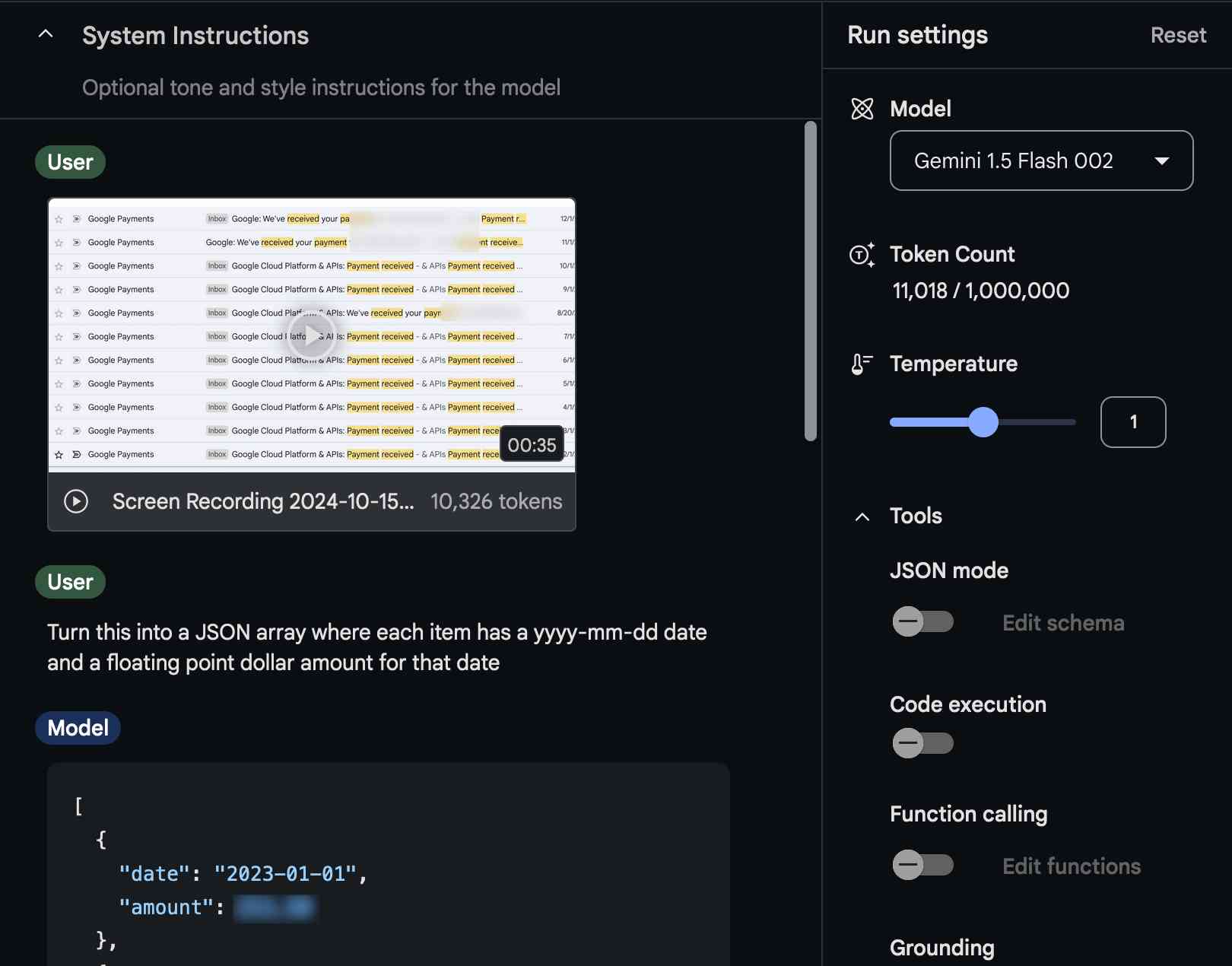

“The other day I found myself needing to add up some numeric values that were scattered across twelve different emails,” Willison wrote in a [detailed post](https://simonwillison.net/2024/Oct/17/video-scraping/) on his blog. He recorded a 35-second video scrolling through the relevant emails, then fed that video into Google’s AI Studio tool, which allows people to experiment with several versions of Google’s Gemini 1.5 Pro and Gemini 1.5 Flash AI models.

Willison then asked Gemini to pull the payment data from the video and arrange it into a structured data format called JSON (JavaScript Object Notation) that included dates and dollar amounts. The AI model successfully extracted the data, which Willison then formatted as a CSV (comma-separated values) table for spreadsheet use. When he double-checked the output for errors as part of his experiment, both the accuracy of the results and what the video analysis cost to run surprised him.
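Willison’s post describes the shape of this workflow (JSON out of Gemini, then CSV for a spreadsheet) rather than publishing the code behind that last step. The following is a minimal Python sketch of the conversion, assuming the model returned a JSON array of objects with hypothetical `date` and `amount` keys; the sample values are invented placeholders, not his actual charges.

```python
import csv
import io
import json
from decimal import Decimal

# Hypothetical model output: a JSON array of payment records.
# The "date" and "amount" keys and these values are placeholders, not real data.
model_output = """
[
  {"date": "2024-09-01", "amount": "0.41"},
  {"date": "2024-09-08", "amount": "1.25"},
  {"date": "2024-09-15", "amount": "0.37"}
]
"""


def json_to_csv(raw_json: str) -> str:
    """Convert a JSON array of {date, amount} objects into CSV text."""
    records = json.loads(raw_json)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["date", "amount"], lineterminator="\n")
    writer.writeheader()
    for record in records:
        writer.writerow({"date": record["date"], "amount": record["amount"]})
    return buffer.getvalue()


def total_amount(raw_json: str) -> Decimal:
    """Sum the amounts exactly, using Decimal to avoid float rounding errors."""
    records = json.loads(raw_json)
    return sum((Decimal(str(r["amount"])) for r in records), Decimal("0"))


if __name__ == "__main__":
    print(json_to_csv(model_output))
    print("Total:", total_amount(model_output))
```

Using `Decimal` rather than `float` keeps currency sums exact. As in Willison’s experiment, the extracted values still need to be checked against the source emails, since a model can misread a number.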

A screenshot of Simon Willison using Google Gemini to extract data from a screen capture video. Credit: Simon Willison

“The cost [of running the video model] is so low that I had to re-run my calculations three times to make sure I hadn’t made a mistake,” he wrote. Willison calculates that the entire video analysis process would have cost less than one-tenth of a cent, using just 11,018 tokens on the Gemini 1.5 Flash 002 model. In the end, he actually paid nothing because Google AI Studio is currently free for some types of use.
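The “less than one-tenth of a cent” figure comes down to simple arithmetic: tokens multiplied by the per-token price. The sketch below assumes a rate of $0.075 per million input tokens for Gemini 1.5 Flash and, as a simplification, bills all 11,018 tokens at that input rate. Both are assumptions for illustration: Google’s published pricing changes over time and charges output tokens at a different rate, so check the current price list before relying on the number.

```python
from decimal import Decimal

# Assumed price in US dollars per one million input tokens.
# Illustrative only; real Gemini pricing varies by model, prompt size, and date.
ASSUMED_PRICE_PER_MILLION = Decimal("0.075")


def token_cost(tokens: int, price_per_million: Decimal = ASSUMED_PRICE_PER_MILLION) -> Decimal:
    """Return the cost in US dollars of processing `tokens` tokens at a flat rate."""
    return Decimal(tokens) * price_per_million / Decimal(1_000_000)


if __name__ == "__main__":
    cost = token_cost(11_018)  # token count reported in the article
    print(f"Cost in dollars: {cost}")
    print(f"Cost in cents: {cost * 100}")
    print("Under one-tenth of a cent:", cost < Decimal("0.001"))
```

Passing a different `price_per_million` shows how quickly the total scales; even at several times the assumed rate, a 35-second clip stays well under a cent.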

Video scraping is just one of many new tricks possible now that the latest large language models (LLMs), such as Google’s Gemini and GPT-4o, are actually “multimodal” models, allowing audio, video, image, and text input. These models translate any multimedia input into tokens (chunks of data), which they use to make predictions about which tokens should come next in a sequence.

A term like “token prediction model” (TPM) might be more accurate than “LLM” these days for AI models with multimodal inputs and outputs, but a generalized alternative term hasn’t really taken off yet. But no matter what you call it, having an AI model that can take video inputs has interesting implications, both good and potentially bad.

Breaking down input barriers

Willison is far from the first person to feed video into AI models to achieve interesting results (more on that below, and here’s a 2015 paper that uses the “video scraping” term), but as soon as Gemini launched its video input capability, he began to experiment with it in earnest.

In February, Willison [demonstrated](https://simonwillison.net/2024/Feb/21/gemini-pro-video/) another early application of AI video scraping on his blog, where he took a seven-second video of the books on his bookshelves, then got Gemini 1.5 Pro to extract all of the book titles it saw in the video and put them in a structured, or organized, list.

Converting unstructured data into structured data is important to Willison, because he’s also a data journalist. Willison has created tools for data journalists in the past, such as the Datasette project, which lets anyone publish data as an interactive website.

To every data journalist’s frustration, some sources of data prove resistant to scraping (capturing data for analysis) due to how the data is formatted, stored, or presented. In these cases, Willison delights in the potential of AI video scraping because it bypasses these traditional barriers to data extraction.

“There’s no level of website authentication or anti-scraping technology that can stop me from recording a video of my screen while I manually click around inside a web application,” Willison noted on his blog. His method works for any visible on-screen content.

Video is the new text

An illustration of a cybernetic eyeball. Credit: Getty Images

The ease and effectiveness of Willison’s technique reflect a noteworthy shift now underway in how some users will interact with token prediction models. Rather than requiring a user to manually paste or type data into a chat dialog, or describe every scenario to a chatbot as text, some AI applications increasingly work with visual data captured directly from the screen. For example, if you’re having trouble navigating a pizza website’s terrible interface, an AI model could step in and perform the necessary mouse clicks to order the pizza for you.

In fact, video scraping is already on the radar of every major AI lab, although they are not likely to call it that at the moment. Instead, tech companies typically refer to these techniques as “video understanding” or simply “vision.”

In May, OpenAI demonstrated a prototype version of its ChatGPT Mac app with an option that allowed ChatGPT to see and interact with what’s on your screen, but that feature has not yet shipped. Microsoft demonstrated a similar “Copilot Vision” prototype concept earlier this month (based on OpenAI’s technology) that will be able to “watch” your screen and help you extract data and interact with applications you’re running.

Despite these research previews, OpenAI’s ChatGPT and Anthropic’s Claude have not yet implemented a public video input feature for their models, possibly because it is relatively expensive, in computational terms, for them to process the extra tokens from a “tokenized” video stream.

For the moment, Google is heavily subsidizing user AI costs with its war chest from Search revenue and a massive fleet of data centers (to be fair, OpenAI is subsidizing, too, but with investor dollars and help from Microsoft). But the cost of AI compute in general is dropping by the day, which will open up new capabilities of the technology to a broader user base over time.

Countering privacy issues

As you might imagine, having an AI model see what you do on your computer screen can have downsides. For now, video scraping is great for Willison, who will undoubtedly use the captured data in positive and helpful ways. But it’s also a preview of a capability that could later be used to invade privacy or autonomously spy on computer users on a scale that was once impossible.

A different form of video scraping caused a massive wave of controversy recently for exactly that reason. Apps such as the third-party Rewind AI on the Mac and Microsoft’s Recall, which is being built into Windows 11, operate by feeding on-screen video into an AI model that stores extracted data in a database for later AI recall. Unfortunately, that approach also introduces potential privacy issues because it records everything you do on your machine and puts it in a single place that could later be hacked.

To that point, although Willison’s technique currently involves uploading a video of his data to Google for processing, he is pleased that he can still decide what the AI model sees and when.

“The great thing about this video scraping technique is that it works with anything that you can see on your screen… and it puts you in total control of what you end up exposing to the AI model,” Willison explained in his blog post.

It’s also possible that, in the future, a locally run open-weights AI model could pull off the same video analysis method without the need for a cloud connection at all. Microsoft Recall runs locally on supported devices, but it still demands a great deal of unearned trust. For now, Willison is perfectly content to selectively feed video data to AI models when the need arises.

“I expect I’ll be using this technique a whole lot more in the future,” he wrote, and perhaps many others will, too, in different forms. If the past is any indication, Willison, who coined the term “prompt injection” in 2022, seems to always be a few steps ahead in exploring novel applications of AI tools. Right now, his attention is on the new implications of AI and video, and yours probably should be, too.

Benj Edwards is Ars Technica’s Senior AI Reporter and founder of the site’s dedicated AI beat in 2022. He’s also a tech historian with almost two decades of experience. In his free time, he writes and records music, collects vintage computers, and enjoys nature. He lives in Raleigh, NC.