Less than two weeks ago, a little-known Chinese company released its latest

DeepSeek claimed in a technical paper uploaded to

The market response to the news on Monday was sharp and brutal: As DeepSeek rose to become the

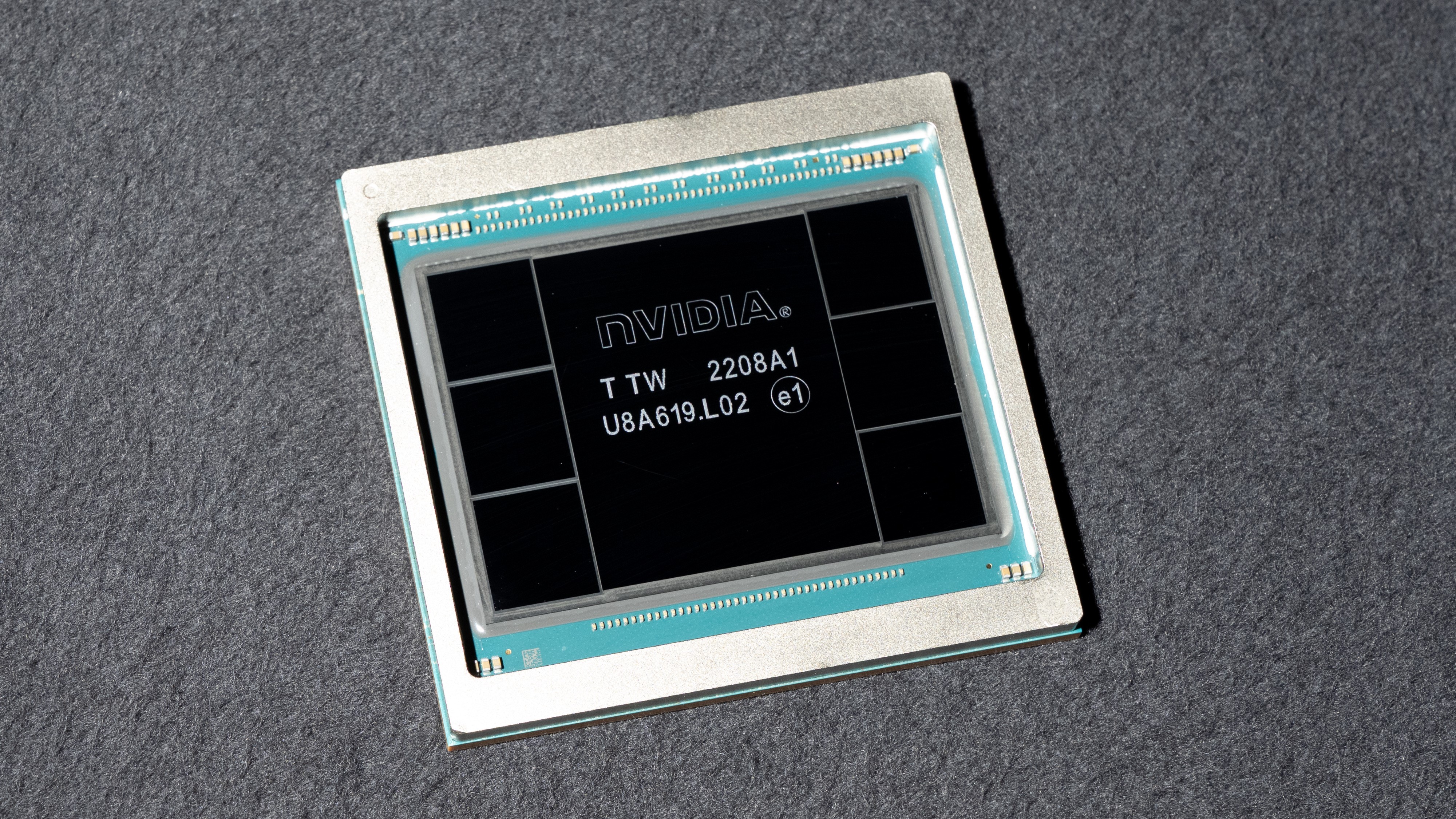

And Nvidia, a company that makes high-end H100 graphics chips presumed essential for AI training, lost $589 billion in valuation in the


AI experts say that DeepSeek’s emergence has upended a key dogma underpinning the industry’s approach to growth, showing that bigger isn’t always better.

“The fact that DeepSeek could be built for less money, less computation and less time and can be run locally on less expensive machines, argues that as everyone was racing towards bigger and bigger, we missed the opportunity to build smarter and smaller,”


But what makes DeepSeek’s V3 and R1 models so disruptive? The key, scientists say, is efficiency.

What makes DeepSeek’s models tick?

“In some ways, DeepSeek’s advances are more evolutionary than revolutionary,”

If we take DeepSeek’s claims at face value, Tewari said, the main innovation in the company’s approach is how it gets its large and powerful models to run just as well as other systems while using fewer resources.

Key to this is a “mixture-of-experts” system that splits DeepSeek’s models into submodels, each specializing in a specific task or data type. This is accompanied by a load-balancing system that, instead of applying an overall penalty to slow an overburdened system as other models do, dynamically shifts tasks from overworked to underworked submodels.

“[This] means that even though the V3 model has 671 billion parameters, only 37 billion are actually activated for any given token,” Tewari said. A token refers to a processing unit in a large language model (LLM), equivalent to a chunk of text.
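To make the routing idea concrete, here is a minimal sketch of a mixture-of-experts router with dynamic load balancing. It is an illustration only, not DeepSeek’s code: the expert count, the top-2 selection, the per-expert bias that is nudged up or down, and the artificial skew toward expert 0 are all assumptions chosen for the example.

```python
import random

def route_token(scores, bias, k):
    """Return the indices of the k experts with the highest score + bias."""
    order = sorted(range(len(scores)), key=lambda i: scores[i] + bias[i], reverse=True)
    return order[:k]

def rebalance(bias, load, step):
    """Lower the bias of experts above the mean load and raise it for those below."""
    mean_load = sum(load) / len(load)
    new_bias = []
    for b, n in zip(bias, load):
        if n > mean_load:
            new_bias.append(b - step)   # overworked: make it less attractive
        elif n < mean_load:
            new_bias.append(b + step)   # underworked: make it more attractive
        else:
            new_bias.append(b)
    return new_bias

def simulate(num_experts=8, k=2, tokens=2000, rounds=30, step=0.05, seed=0):
    """Route batches of tokens and record the per-expert load after each round."""
    rng = random.Random(seed)
    # Hypothetical skew: the router "naturally" prefers expert 0.
    skew = [1.0] + [0.0] * (num_experts - 1)
    bias = [0.0] * num_experts
    history = []
    for _ in range(rounds):
        load = [0] * num_experts
        for _ in range(tokens):
            scores = [rng.gauss(0.0, 1.0) + s for s in skew]
            for expert in route_token(scores, bias, k):
                load[expert] += 1
        history.append(load)
        bias = rebalance(bias, load, step)
    return history

history = simulate()
first_spread = max(history[0]) - min(history[0])    # busiest minus idlest, round 1
last_spread = max(history[-1]) - min(history[-1])   # same gap after rebalancing

# Share of V3's parameters active per token, from the figures quoted above.
active_share = 37 / 671   # about 5.5%
```

Each token touches only `k` of the experts, which is why only a fraction of the parameters are active at once. In this toy setup, the gap between the busiest and the idlest expert shrinks as the biases adjust, without any penalty being applied to the model as a whole.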

Furthering this load balancing is a technique known as “inference-time compute scaling,” a dial within DeepSeek’s models that ramps allocated computing up or down to match the complexity of an assigned task.

This efficiency extends to the training of DeepSeek’s models, which experts cite as an unintended consequence of U.S. export restrictions.

A more efficient type of large language model

The need to use these less-powerful chips forced DeepSeek to make another significant breakthrough: its mixed precision framework. Instead of representing all of its model’s weights (the numbers that set the strength of the connection between an AI model’s artificial neurons) using 32-bit floating point numbers (FP32), it trained parts of its model with less-precise 8-bit numbers (FP8), switching to 32 bits only for harder calculations where accuracy matters.

“This allows for faster training with fewer computational resources,”
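The trade-off behind mixed precision can be shown with a small sketch. Python’s standard library has no 8-bit float, so 16-bit floats stand in for FP8 here and Python’s native float stands in for the high-precision accumulator; both substitutions are assumptions made for illustration, and nothing below is DeepSeek’s actual framework.

```python
import struct

def to_half(x):
    """Round a float to 16-bit precision (a stand-in for FP8 in this sketch)."""
    return struct.unpack("e", struct.pack("e", x))[0]

def dot_mixed(weights, inputs):
    """Multiply low-precision values but keep the running sum in high precision."""
    total = 0.0  # high-precision accumulator
    for w, x in zip(weights, inputs):
        total += to_half(w) * to_half(x)
    return total

def dot_low(weights, inputs):
    """Same dot product, but the running sum is also rounded to low precision."""
    total = 0.0
    for w, x in zip(weights, inputs):
        total = to_half(total + to_half(w) * to_half(x))
    return total

weights = [0.001] * 10000
inputs = [1.0] * 10000
exact = sum(w * x for w, x in zip(weights, inputs))   # close to 10.0

mixed_error = abs(dot_mixed(weights, inputs) - exact)  # small
low_error = abs(dot_low(weights, inputs) - exact)      # large: small terms get rounded away

# Upper bound on storage saved if every one of 671 billion weights were stored
# in 1 byte instead of 4. The article says only parts of the model use FP8.
fp32_gigabytes = 671e9 * 4 / 1e9
fp8_gigabytes = 671e9 * 1 / 1e9
```

Storing and multiplying in low precision costs little accuracy in this example, while also accumulating in low precision loses most of the sum. That is the reason for switching back to higher precision where accuracy matters.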

Similarly, while it is common to train AI models using human-provided labels to score the accuracy of answers and reasoning, R1’s reasoning is unsupervised. It uses only the correctness of final answers in tasks like math and coding for its reward signal, which frees up training resources to be used elsewhere.
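A reward based only on final answers can be sketched in a few lines. The `Answer:` marker and the exact-match comparison below are hypothetical conventions invented for this example; the article does not describe how R1’s answers are actually extracted or checked.

```python
import re

def extract_final_answer(response):
    """Return the text after the last 'Answer:' marker, or None if there is none."""
    matches = re.findall(r"Answer:\s*(.+)", response)
    return matches[-1].strip() if matches else None

def outcome_reward(response, reference):
    """Return 1.0 if the final answer matches the reference, else 0.0.

    The reasoning text before the answer is never graded.
    """
    answer = extract_final_answer(response)
    if answer is not None and answer == reference.strip():
        return 1.0
    return 0.0

good = "12 times 12 is 144, minus 4 gives 140.\nAnswer: 140"
bad = "12 times 12 is 124, minus 4 gives 120.\nAnswer: 120"
missing = "I think it is probably around 140."

rewards = [outcome_reward(r, "140") for r in (good, bad, missing)]
```

Because the check is a simple rule rather than a human label on every reasoning step, it is cheap to apply to large numbers of math or coding problems.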

All of this adds up to a startlingly efficient pair of models. While the training costs of DeepSeek’s competitors run into the

Cao is careful to note that DeepSeek’s research and development, which includes its hardware and a huge number of trial-and-error experiments, means it almost certainly spent much more than this $5.58 million figure. Nonetheless, it is still a significant enough drop in cost to have caught its competitors flat-footed.

Overall, AI experts say that DeepSeek’s popularity is likely a net positive for the industry, bringing exorbitant resource costs down and lowering the barrier to entry for researchers and firms. It could also create space for more chipmakers than Nvidia to enter the race. Yet it also comes with its own dangers.

“As cheaper, more efficient methods for developing cutting-edge AI models become publicly available, they can allow more researchers worldwide to pursue cutting-edge LLM development, potentially speeding up scientific progress and application creation,” Cao said. “At the same time, this lower barrier to entry raises new regulatory challenges — beyond just the U.S.-China rivalry — about the misuse or potentially destabilizing effects of advanced AI by state and non-state actors.”

Ben Turner is a U.K.-based staff writer at Live Science. He covers physics and astronomy, among other topics like tech and climate change. He graduated from University College London with a degree in particle physics before training as a journalist. When he’s not writing, Ben enjoys reading literature, playing the guitar and embarrassing himself with chess.
