Over the last few years, Google has embarked on a quest to jam generative AI into every product and initiative possible. Google has robots summarizing search results, interacting with your apps, and analyzing the data on your phone. And sometimes, the output of generative AI systems can be surprisingly good despite lacking any real knowledge. But can they do science?

Google Research is now angling to turn AI into a scientist, or rather a “co-scientist.” The company has a new multi-agent AI system based on Gemini 2.0, aimed at biomedical researchers, that can supposedly point the way toward new hypotheses and areas of biomedical research. However, Google’s AI co-scientist boils down to a fancy chatbot.

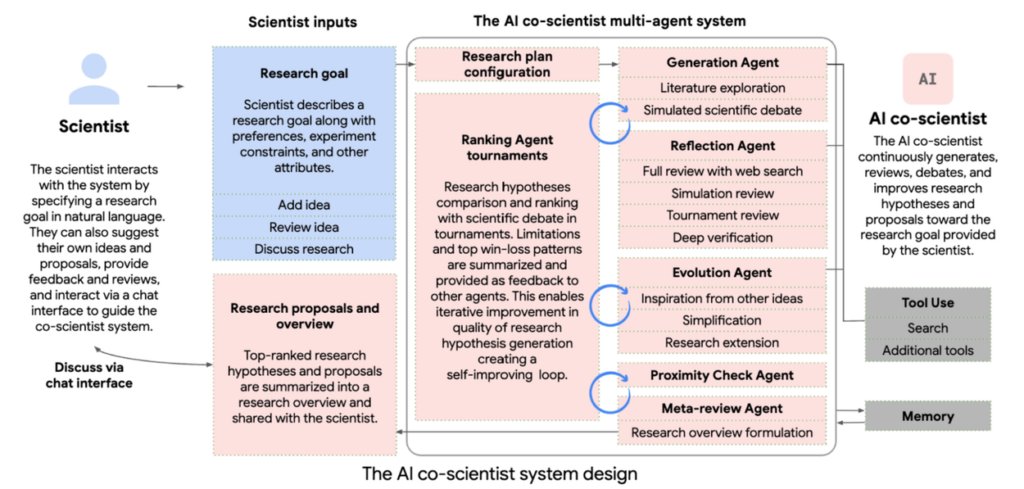

A flesh-and-blood scientist using Google’s co-scientist would input their research goals, ideas, and references to past research, allowing the robot to generate possible avenues of research. The AI co-scientist contains multiple interconnected models that churn through the input data and access Internet resources to refine the output. Inside the tool, the different agents challenge each other to create a “self-improving loop,” which is similar to the new raft of reasoning AI models like Gemini Flash Thinking and OpenAI o3.

This is still a generative AI system like Gemini, so it doesn’t truly have any new ideas or knowledge. However, it can extrapolate from existing data to potentially make decent suggestions. At the end of the process, Google’s AI co-scientist spits out research proposals and hypotheses. The human scientist can even talk with the robot about the proposals in a chatbot interface.

The architecture of Google’s AI co-scientist.

You can think of the AI co-scientist as a highly technical form of brainstorming. The same way you can bounce party-planning ideas off a consumer AI model, scientists will be able to conceptualize new scientific research with an AI tuned specifically for that purpose.

Testing AI science

Today’s popular AI systems have a well-known problem with accuracy. Generative AI always has something to say, even if the model doesn’t have the right training data or model weights to be helpful, and fact-checking with more AI models can’t work miracles. Leveraging its reasoning roots, the AI co-scientist conducts an internal evaluation to improve its outputs, and Google says the self-evaluation ratings correlate with greater scientific accuracy.

The internal metrics are one thing, but what do real scientists think? Google had human biomedical researchers evaluate the robot’s proposals, and they reportedly rated the AI co-scientist higher than other, less specialized agentic AI systems. The experts also agreed that the AI co-scientist’s outputs showed greater potential for impact and novelty compared to standard AI models.

This doesn’t mean the AI’s suggestions are all good. However, Google partnered with several universities to test some of the AI’s research proposals in the laboratory. For example, the AI suggested repurposing certain drugs for treating acute myeloid leukemia, and laboratory testing suggested it was a viable idea. Research at Stanford University also showed that the AI co-scientist’s ideas about treatment for liver fibrosis were worthy of further study.

This is compelling work, certainly, but calling this system a “co-scientist” is perhaps a bit grandiose. Despite the insistence from AI leaders that we’re on the verge of creating living, thinking machines, AI isn’t anywhere close to being able to do science on its own. That doesn’t mean the AI co-scientist won’t be useful, though. Google’s new AI could help humans interpret and contextualize large data sets and bodies of research, even if it can’t understand or offer true insights.

Google says it wants more researchers working with this AI system in the hope it can assist with real research. Researchers and organizations can apply to be part of the Trusted Tester program, which provides access to the co-scientist UI as well as an API that can be integrated with existing tools.