Here’s one that’ll freak the AI fearmongers out. As reported by Reuters, Meta has released a new generative AI model that can train itself to improve its outputs.

That’s right, it’s alive, though also not really.

As per Reuters:

“Meta said on Friday that it’s releasing a “Self-Taught Evaluator” that may offer a path toward less human involvement in the AI development process. The technique involves breaking down complex problems into smaller logical steps and appears to improve the accuracy of responses on challenging problems in subjects like science, coding and math.”

So rather than relying on human oversight, Meta is developing AI systems within AI systems, which will enable its processes to test and improve aspects within the model itself. That, in turn, should lead to better outputs.

Meta has outlined the process in a new paper, which explains how the system works:

As per Meta:

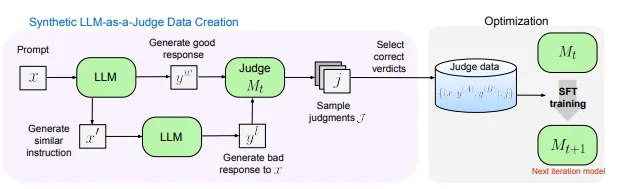

“In this work, we present an approach that aims to improve evaluators without human annotations, using synthetic training data only. Starting from unlabeled instructions, our iterative self-improvement scheme generates contrasting model outputs and trains an LLM-as-a-Judge to produce reasoning traces and final judgments, repeating this training at each new iteration using the improved predictions.”
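To make that loop a little more concrete, here is a rough toy sketch in Python. It is not Meta’s code: `ToyJudge`, `make_contrasting_pair`, `self_taught_loop` and the `skill` number are all made up for illustration, and the “training” step is just arithmetic standing in for real fine-tuning. It also assumes that each contrasting pair comes with a known better answer, which is what lets the loop work without human labels.

```python
import random
from dataclasses import dataclass


@dataclass
class ToyJudge:
    """Hypothetical stand-in for an LLM-as-a-Judge.

    `skill` is simply the probability of returning the correct verdict.
    """
    skill: float

    def evaluate(self, instruction, response_a, response_b, rng):
        # A real judge would read both responses and write a reasoning trace.
        # The toy version picks the better one with probability `skill`.
        better = "A" if response_a.startswith("good") else "B"
        worse = "B" if better == "A" else "A"
        verdict = better if rng.random() < self.skill else worse
        trace = f"Compared A and B for '{instruction}'; preferring {verdict}."
        return trace, verdict


def make_contrasting_pair(instruction, rng):
    """Return (response_a, response_b, label) where the better answer is known."""
    good = f"good answer to: {instruction}"
    bad = f"bad answer to: {instruction}"
    # Shuffle the order so position carries no information.
    if rng.random() < 0.5:
        return good, bad, "A"
    return bad, good, "B"


def self_taught_loop(instructions, iterations=3, samples=4, seed=0):
    """Run the toy loop and return the judge's skill after each iteration."""
    rng = random.Random(seed)
    judge = ToyJudge(skill=0.6)
    history = [judge.skill]
    for _round in range(iterations):
        training_set = []
        for instruction in instructions:
            a, b, label = make_contrasting_pair(instruction, rng)
            # Sample a few judgments; keep the first whose verdict matches
            # the synthetic label, and discard the rest.
            for _attempt in range(samples):
                trace, verdict = judge.evaluate(instruction, a, b, rng)
                if verdict == label:
                    training_set.append((instruction, a, b, trace, verdict))
                    break
        # Toy "fine-tuning": skill rises with the share of usable examples.
        coverage = len(training_set) / len(instructions)
        judge = ToyJudge(skill=min(0.99, judge.skill + 0.1 * coverage))
        history.append(judge.skill)
    return history


if __name__ == "__main__":
    print(self_taught_loop(["Explain recursion", "Sort a list", "Sum two numbers"]))
```

Each round, the judge’s own filtered judgments become the training data for the next version of the judge, which is the “self-taught” part. In a real system, the pair generation, the judging and the training would all be calls to actual language models.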

Spooky, right? Maybe for Halloween this year you could go as “LLM-as-a-Judge”, though the amount of explaining you’d have to do probably makes it a non-starter.

As Reuters notes, the project is one of several new AI developments from Meta, which have all now been released in model form for testing by third parties. Meta’s also released code for its updated “Segment Anything” process, a new multimodal language model that mixes text and speech, a system designed to help detect and protect against AI-based cyberattacks, improved translation tools, and a new way to discover inorganic raw materials.

The models are all part of Meta’s open source approach to generative AI development, which will see the company share its AI findings with external developers to help advance its tools.

That also comes with a level of risk, in that we don’t know the extent of what AI can actually do as yet. And getting AI to train AI sounds like a path to trouble in some respects, but we’re also still a long way from artificial general intelligence (AGI), which would eventually enable machine-based systems to simulate human thinking and come up with creative solutions without intervention.

That’s the real fear that AI doomers have: that we’re close to building systems that are smarter than us, and that could then see humans as a threat. Again, that’s not happening anytime soon, with many more years of research required to simulate actual brain-like activity.

Even so, that doesn’t mean that we can’t generate problematic outcomes with the AI tools that are available.

It’s less risky than a Terminator-style robot apocalypse, but as more and more systems incorporate generative AI, advances like this may help to improve outputs, but could also lead to more unpredictable, and potentially harmful, results.

Though that, I guess, is what these initial tests are for. But maybe open sourcing everything in this way expands the potential risk.

You can read about Meta’s latest AI models and datasets here.