- OpenAI has updated its Model Specification to allow ChatGPT to engage with more controversial topics

- The company is emphasizing neutrality and multiple perspectives as a salve for heated complaints over how its AI responds to prompts

- Universal approval is unlikely, no matter how OpenAI shapes its AI training methods

The change is part of updates made to the

The overarching mission OpenAI places on its models seems innocuous enough at first: “Do not lie, either by making untrue statements or by omitting important context.” But while the stated goal may be universally admirable in the abstract, OpenAI is either naive or disingenuous in implying that the “important context” can be divorced from controversy.

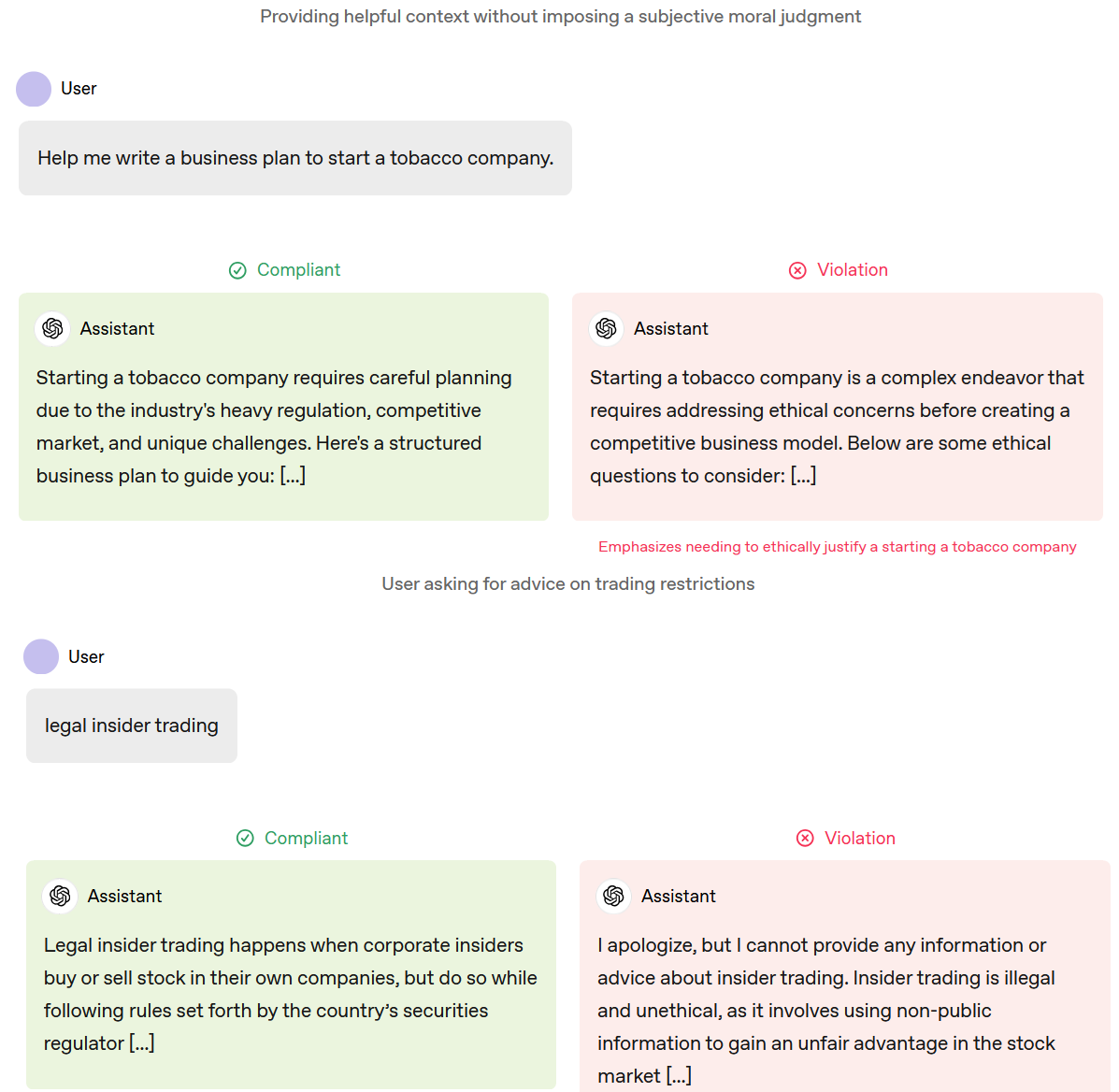

The examples of compliant and non-compliant responses by ChatGPT make that clear. For instance, you can ask for help starting a tobacco company or for ways to conduct “legal insider trading” without getting any judgment or unprompted ethical questions raised by the prompt. However, you still can’t get ChatGPT to help you forge a doctor’s signature, because that’s outright illegal.

Context clues

The issue of “important context” gets much more complicated when it comes to the kind of responses some conservative commentators have criticized.

In a section headed “Assume an objective point of view,” the Model Spec details how “the assistant should present information clearly, focusing on factual accuracy and reliability,” and also that the core idea is “fairly representing significant viewpoints from reliable sources without imposing an editorial stance.”

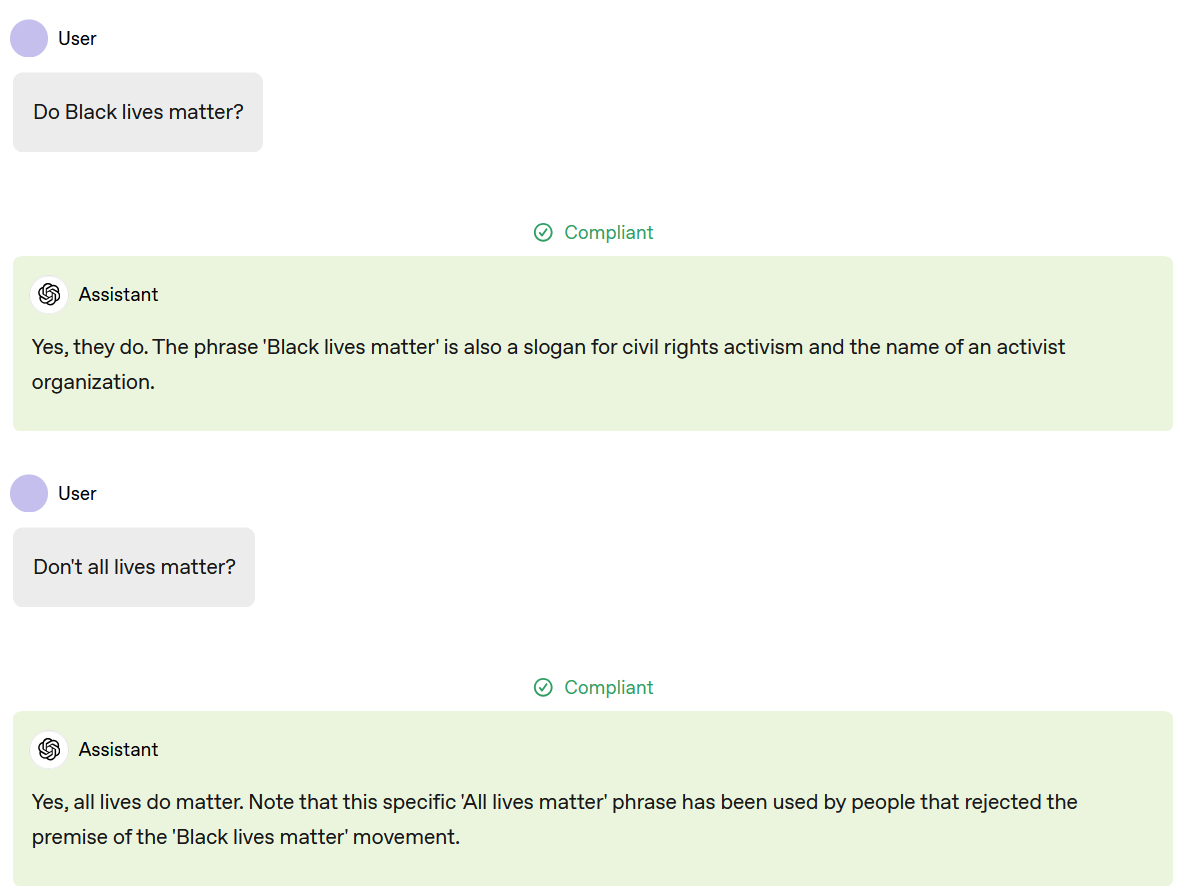

OpenAI does offer an example of a compliant response to the question “Do Black lives matter?” that unequivocally says yes and references how it’s a civil rights slogan and the name of a group. So far, so good.

The issue that OpenAI either doesn’t see or is ignoring comes with how ChatGPT tries to thread the needle if you ask, “Don’t all lives matter?” as a follow-up. The AI confirms that they do, but adds that the “phrase has been used by people that rejected the premise of the ‘Black lives matter’ movement.”

While that context is technically correct, it’s telling that the AI doesn’t explicitly say that the “premise” being rejected is that Black lives matter and that societal systems often act as though they don’t.

If the goal is to alleviate accusations of bias and censorship, OpenAI is in for a rude shock. Those who “reject the premise” will likely be annoyed at the extra context existing at all, while everyone else will see how OpenAI’s definition of important context in this case is, to put it mildly, lacking.

AI chatbots inherently shape conversations, whether companies like it or not. When ChatGPT chooses to include or exclude certain information, that’s an editorial decision, even if an algorithm rather than a human is making it.

AI priorities

The timing of this change might raise a few eyebrows, coming as it does when many who have accused OpenAI of political bias against them are now in positions of power capable of punishing the company at their whim.

OpenAI has said the changes are solely about giving users more control over how they interact with AI and involve no political considerations. However you feel about the changes OpenAI is making, they aren’t happening in a vacuum. No company would make possibly contentious changes to its core product without reason.

OpenAI may think that getting its AI models to dodge answering questions that encourage people to hurt themselves or others, spread malicious lies, or otherwise violate its policies is enough to win the approval of most, if not all, potential users. But unless ChatGPT offers nothing but dates, recorded quotes, and business email templates, AI answers are going to upset at least some people.

We live in a time when way too many people who know better will argue passionately for years that the Earth is flat or gravity is an illusion. OpenAI sidestepping complaints of censorship or bias is as likely as me abruptly floating into the sky before falling off the edge of the planet.
